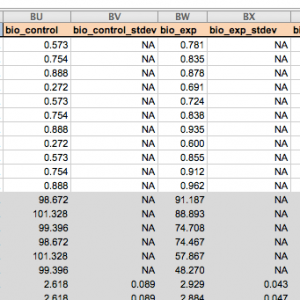

My spirits sank a bit more as I typed yet another NA into a “Standard Deviation” column in my spreadsheet. I was retrieving data from algae growth experiments in dozens of scientific papers, and the proportion of papers that did not report standard deviations (or provide enough data for me to calculate them) was staggering. Sinking my spirits further, many of the papers did not report the number of experimental replicates they tested (in my case, the number of algae cultures they grew).

Without this data, I wouldn’t be able to use standard methods to conduct a meta-analysis. A meta-analysis quantitatively summarizes previously published data on a given topic, so that broader trends or conclusions can emerge that no single publication could reveal on its own. When combining data from many different studies, standard (traditional) meta-analysis methods weight each study based on its standard deviation and number of replicates; in practice, each effect size is weighted by the inverse of its sampling variance, which depends on both. Studies with lower standard deviations and more replicates receive more weight because their estimates are more precise. However, if a study does not report these values, its weight cannot be calculated.
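To make the weighting concrete, here is a minimal Python sketch of a fixed-effect, inverse-variance weighted meta-analysis. It assumes the log response ratio (lnRR) as the effect size, a common choice for growth data in ecology, with the large-sample variance given by Hedges, Gurevitch and Curtis (1999). The post does not say which effect size or model was used, and the two example experiments are invented for illustration. Each experiment’s weight is the reciprocal of its variance, so both the SDs and the replicate counts enter directly.

```python
import math

def log_response_ratio(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Return (lnRR, sampling variance) for one treatment/control comparison.

    The variance formula needs both SDs and both replicate counts.
    """
    effect = math.log(mean_t / mean_c)
    variance = sd_t**2 / (n_t * mean_t**2) + sd_c**2 / (n_c * mean_c**2)
    return effect, variance

def fixed_effect_mean(experiments):
    """Inverse-variance weighted mean lnRR, its standard error, and the weights."""
    results = [log_response_ratio(*exp) for exp in experiments]
    weights = [1.0 / var for _, var in results]
    total = sum(weights)
    mean = sum(w * eff for w, (eff, _) in zip(weights, results)) / total
    return mean, math.sqrt(1.0 / total), weights

# Hypothetical experiments:
# (treatment mean, treatment SD, treatment n, control mean, control SD, control n)
experiments = [
    (1.2, 0.1, 5, 1.0, 0.1, 5),  # precise: small SDs, 5 replicates each
    (1.5, 0.6, 3, 1.0, 0.5, 3),  # noisy: large SDs, 3 replicates each
]
mean, se, weights = fixed_effect_mean(experiments)
print(f"weights: {[round(w, 1) for w in weights]}")
print(f"weighted mean lnRR: {mean:.3f} (SE {se:.3f})")
```

The first hypothetical experiment, with smaller SDs and more replicates, receives a much larger weight and pulls the mean toward its own effect. Remove either the SD or n for an experiment and its variance, and therefore its weight, can no longer be computed.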

For the meta-analysis I performed, I ended up extracting data from 518 experiments. On average, about 77% of them lacked standard deviations for their growth data. On top of that, in roughly 100 of these experiments it was unclear how many experimental replicates had been tested, and many likely had no replication at all. Excluding these experiments from my analyses would have discarded too much data, though, so I sank deeper into searching for alternative meta-analysis methods.

I found I was not alone. In 1999, professors Jessica Gurevitch and Larry Hedges published the paper *Statistical Issues in Ecological Meta-analyses*. They wrote, “Ecological experiments commonly fail to report sample sizes and variances … which makes it impossible to include those studies in a meta-analysis that uses the standard (weighted) parametric statistical tests designed for meta-analysis. The obvious solution is to upgrade publication standards, alerting authors, reviewers, and editors so that papers are not published without the basic information necessary for readers to properly evaluate the results.” This paper led me to the alternative methods I used to analyze my data. But it also alerted me to a scientific reporting issue that was deeper than I’d realized.

Even after 17 years, the “obvious solution” the authors recommended (which they admitted was a “lofty aspiration”) does not seem to have been implemented, or is not working. Most of the experimental data I used was published within the past 6 years, in both biotechnological and ecological journals, yet much of it still lacks necessary information such as sample sizes and variances.

In 2016, multiple authors (including Jessica Gurevitch) addressed this issue in the opinion paper *Transparency in Ecology and Evolution: Real Problems, Real Solutions*, which I recommend. Parker et al. describe specific practices, some of which researchers may adopt without ill intent, that lead to irreproducible and non-transparent research results. This lack of transparency severely limits other researchers’ ability to use the published data in a meta-analysis, among other consequences.

Moreover, the authors outline steps to improve transparency and reporting in scientific research. These steps are more likely to be effective if they come from the very institutions and journals that created the pressure to publish novel and significant results. Parker et al. cite the Transparency and Openness Promotion (TOP) framework, which offers rules that scientific journals and funding agencies can adopt. Some of these rules cover the sharing of data, code, and materials.

A more advanced TOP rule is preregistration, in which researchers register their study methods and analysis plans before conducting the research. This rule could reduce publication bias (the tendency for published studies to over-represent significant results) and would also let readers distinguish planned analyses from exploratory ones, since exploratory analyses may produce significant results by chance. To encourage preregistration, the Center for Open Science is even awarding $1,000 prizes to researchers who preregister and publish their research within the next couple of years.
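The point about exploratory analyses can be quantified. If a researcher runs k independent tests at a significance level of 0.05 and every null hypothesis is actually true, the chance that at least one comes out “significant” is 1 − (1 − 0.05)^k; with 20 such tests it is already about 64%. The Python sketch below assumes independent tests, which is a simplification, since real exploratory analyses are often correlated; the function name is illustrative.

```python
def chance_of_false_positive(num_tests, alpha=0.05):
    """Probability that at least one of `num_tests` independent tests is
    significant at level `alpha` when every null hypothesis is true."""
    return 1.0 - (1.0 - alpha) ** num_tests

for k in (1, 5, 10, 20):
    print(f"{k:>2} tests: {chance_of_false_positive(k):.0%} chance of a spurious result")
```

A preregistered plan fixes k in advance, which is what lets readers judge how much of a reported effect could be chance.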

Lastly, the TOP rules encourage the submission of replication studies, which re-test a research question posed in a previous study. Replication is an important aspect of the scientific process, yet replication studies are often not undertaken or funded because they are inherently non-novel.

My frustration with other studies’ insufficient data reporting has led me to improve my own thoroughness and awareness of scientific transparency. With TOP guidelines available and journals becoming more stringent about reporting requirements, hopefully the issue will not need another overhaul 17 years from now.

Great post, Sarah. Keep up the excellent work!

I’ve faced the same problem as you and would encourage you to find a way around discarding data that don’t have SD or n. Many methods for including such data are in the books from your post.

I used one approach in LeBauer et al. 2013 [1] that I think came from Koricheva et al.’s *Handbook of Meta-analysis in Ecology and Evolution*. A basic, semi-reproducible example of the analysis is in [2].

The Koricheva book also has many methods for estimating SD from other statistics. And I’ve written out a few (with some overlap) here [3].
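Two of the most common conversions are easy to script. The Python sketch below recovers an SD from a reported standard error of the mean (SD = SE × √n) and from a confidence interval for the mean, assuming a symmetric interval of width 2 × crit × SE, with crit = 1.96 for a normal-based 95% interval. For small samples, crit should be the appropriate t critical value instead (about 2.776 for n = 5). The function names and example values are hypothetical. Note that both conversions still require n, so they cannot rescue an experiment whose replicate count is unknown.

```python
import math

def sd_from_se(se, n):
    """SD from a reported standard error of the mean: SD = SE * sqrt(n)."""
    return se * math.sqrt(n)

def sd_from_ci(lower, upper, n, crit=1.96):
    """SD from a confidence interval for the mean.

    Assumes a symmetric interval of the form mean +/- crit * SE, so the
    interval width is 2 * crit * SE. Use a t critical value for small n.
    """
    se = (upper - lower) / (2 * crit)
    return sd_from_se(se, n)

# Hypothetical reported values for a culture with n = 5 replicates
print(sd_from_se(0.12, 5))                    # paper reported mean ± SE, SE = 0.12
print(sd_from_ci(0.85, 1.15, 5, crit=2.776))  # paper reported a 95% CI (t, 4 df)
```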

I hope one day open data will make meta-analysis obsolete! Until then…

[1] https://github.com/PecanProject/pecan/blob/master/documentation/lebauer2013ffb.pdf

[2] https://github.com/PecanProject/pecan/blob/master/modules/meta.analysis/vignettes/single.MA_demo.Rmd

[3] https://www.authorea.com/users/5574/articles/6811/_show_article

Hi David, thank you for the comments and for sharing these sources! I estimated standard deviations using methods from the Koricheva et al. book, as you mentioned, but ultimately decided to use the resampling methods outlined in Adams et al. (http://onlinelibrary.wiley.com/doi/10.1890/0012-9658(1997)078%5B1277:RTFMAO%5D2.0.CO;2/full). This allowed me to use all of the data, even data lacking standard deviations or sample sizes.
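Resampling sidesteps the need for per-study variances. As a rough sketch of the idea, not a reproduction of the exact procedures in Adams et al., the Python code below computes a percentile bootstrap confidence interval for the unweighted mean effect size and a randomization test for a difference in mean effect between two groups of experiments. The effect sizes are hypothetical lnRR values, and the function names, number of resamples, and fixed seed are illustrative choices.

```python
import random
import statistics

def bootstrap_ci(effects, n_boot=9999, level=0.95, seed=1):
    """Percentile bootstrap CI for the unweighted mean effect size.

    Experiments are resampled with replacement, so no per-experiment
    variances or sample sizes are needed.
    """
    rng = random.Random(seed)
    k = len(effects)
    boot_means = sorted(
        statistics.fmean(rng.choices(effects, k=k)) for _ in range(n_boot)
    )
    lo = boot_means[int((1 - level) / 2 * n_boot)]
    hi = boot_means[int((1 + level) / 2 * n_boot) - 1]
    return lo, hi

def randomization_test(group_a, group_b, n_perm=9999, seed=1):
    """Two-sided randomization test for a difference in mean effect size
    between two groups of experiments; returns an approximate p-value."""
    rng = random.Random(seed)
    observed = abs(statistics.fmean(group_a) - statistics.fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign group labels at random
        diff = abs(statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:]))
        if diff >= observed:
            as_extreme += 1
    # Count the observed arrangement itself so the p-value is never zero
    return (as_extreme + 1) / (n_perm + 1)

# Hypothetical lnRR values for two groups of experiments (no SDs needed)
group_a = [0.50, 0.60, 0.55, 0.70, 0.65]
group_b = [0.00, 0.10, -0.05, 0.05, 0.02]
print(bootstrap_ci(group_a + group_b))
print(randomization_test(group_a, group_b))
```

The trade-off is that every experiment counts equally, so a single-replicate culture has as much influence as a well-replicated one; resampling gives valid intervals without weights but cannot recover the precision information that was never reported.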

You may also find that the standard deviation and standard error are confused in the literature. Many researchers do not really understand the distinction, and I’ve seen numerous instances where I’m fairly sure they were reported and discussed incorrectly.
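The consequences of that mix-up are easy to quantify. Since SE = SD / √n, a standard error mistakenly read as an SD makes the sampling variance of the mean n times too small, and so inflates the study’s inverse-variance weight by a factor of n. The short Python sketch below shows this with hypothetical values (six replicate cultures, an SD of 0.30); the helper name is illustrative.

```python
import math

def inverse_variance_weight(sd, n):
    """Weight of a mean in an inverse-variance meta-analysis: n / SD**2."""
    return n / sd**2

n = 6           # hypothetical number of replicate cultures
true_sd = 0.30  # hypothetical true SD of the growth measurement
reported = true_sd / math.sqrt(n)  # the paper actually reported the SE...

correct = inverse_variance_weight(true_sd, n)
mistaken = inverse_variance_weight(reported, n)  # ...but it was read as an SD

print(f"weight inflated by a factor of {mistaken / correct:.1f}")  # equals n
```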